Fox Corp. made ripples in media circles on Tuesday when it announced that it was launching “Verify,” a new blockchain-based tool for verifying the authenticity of digital media in the age of AI.

The project addresses a pair of increasingly nettlesome problems: AI is making it easier for “deepfake” content to spring up and mislead readers, and publishers are frequently finding that their content has been used to train AI models without permission.

A cynical take might be that this is all just a big public relations move. Stirring “AI” and “Blockchain” together into a buzzword stew to help build “trust in news” sounds like great press fodder, especially if you’re an aging media conglomerate with credibility issues. We’ve all seen Succession, haven’t we?

But let’s set the irony aside for a moment and take Fox and its new tool seriously. On the deepfake end, Fox says people can load URLs and images into the Verify system to determine if they’re authentic, meaning a publisher has added them to the Verify database. On the licensing end, AI companies can use the Verify database to access (and pay for) content in a compliant way.

Blockchain Creative Labs, Fox’s in-house technology arm, partnered with Polygon, the low-fee, high-throughput blockchain that works atop the sprawling Ethereum network, to power things behind the scenes. Adding new content to Verify essentially means adding an entry to a database on the Polygon blockchain, where its metadata and other information are stored.

Unlike so many other crypto experiments, the blockchain tie-in might have a point this time around: Polygon gives content on Verify an immutable audit trail, and it ensures that third-party publishers don’t need to trust Fox to steward their data.
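
To make the idea of an immutable audit trail concrete, here is a minimal sketch in Python of a hash-linked log. It is an illustration only, not Fox’s or Polygon’s code: the function names, record fields, and example URLs are all hypothetical. Each entry stores the hash of the entry before it, so quietly rewriting an old record breaks the chain.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the very first entry


def entry_hash(record: dict, prev_hash: str) -> str:
    """Hash a record together with the hash of the entry before it."""
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log: list, record: dict) -> None:
    """Append a record, linking it to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append(
        {"record": record, "prev": prev_hash, "hash": entry_hash(record, prev_hash)}
    )


def is_intact(log: list) -> bool:
    """Recompute every link; an edited record no longer matches its hash."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(entry["record"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical entries; a real system would store richer metadata.
log = []
append(log, {"url": "https://example.com/story-1", "publisher": "Example News"})
append(log, {"url": "https://example.com/story-2", "publisher": "Example News"})
intact_before = is_intact(log)

# Quietly rewrite the first record after the fact.
log[0]["record"]["publisher"] = "Someone Else"
intact_after = is_intact(log)
```

On a public blockchain, many independent parties hold and check the chain, which is why a third-party publisher would not have to take the operator’s word that old entries were left alone.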

Verify in its current state feels a bit like a glorified database checker, a simple web app that uses Polygon’s tech to keep track of images and URLs. But that doesn’t mean it’s useless, particularly when it comes to helping legacy publishers navigate licensing deals in the world of large language models.

Verify for Consumers

We went ahead and uploaded some content into Verify’s web app to see how well it works in day-to-day use, and it didn’t take us long to notice the app’s limitations for the consumer use case.

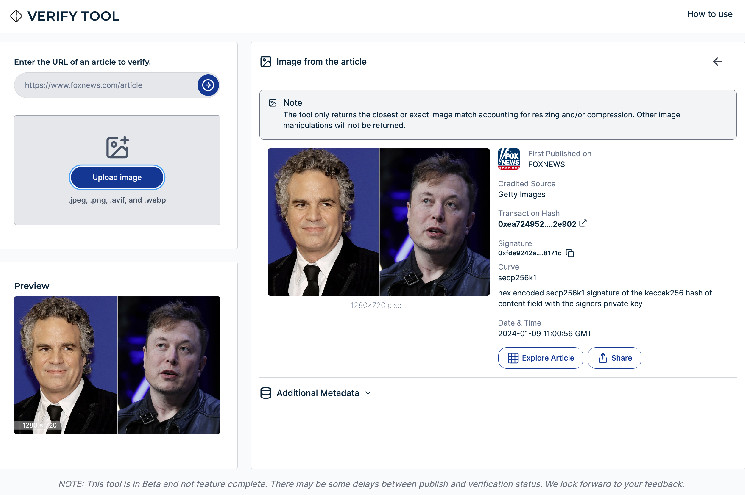

The Verify app has a text input box for URLs. When we pasted in a Fox News article from Tuesday about Elon Musk and deepfakes (which happened to be featured prominently on the site) and pressed “enter,” a bunch of information popped up testifying to the article’s provenance. Along with a transaction hash and signature (data for the Polygon blockchain transaction representing the piece of content), the Verify app also showed the article’s associated metadata, licensing information, and a set of images that appear in the content.

We then downloaded and re-uploaded one of those images into the tool to see if it could be verified. When we did, we were shown data similar to what we saw when we entered the URL. (When we tried another image, we could also click on a link to see other Fox articles that the image had been used in. Cool!)

While Verify accomplished these simple tasks as advertised, it’s hard to imagine many people will need to “verify” the source of content that they lifted directly from the Fox News website.

In its documentation, Verify suggests that a potential user of the service might be a person who comes across an article on social media and wants to figure out whether it’s from a putative source. When we ran Verify through this real-world scenario, we ran into issues.

We found an official Fox News post on X (the platform formerly known as Twitter) featuring the same article that we verified initially, and we then uploaded X’s version of the article’s URL into Verify. Even though clicking on the X link lands one directly on the same Fox News page that we checked initially, and Verify was able to pull up a preview of the article, Verify wasn’t able to tell us if the article was authentic this time around.

We then screen-grabbed the thumbnail image from the Fox News post: one of the same Fox images that we uploaded last time around. This time, we were told that the image couldn’t be authenticated. It turns out that if an image is manipulated in any way (which includes slightly cropped thumbnails, or screenshots whose dimensions aren’t exactly right), the Verify app gets confused.
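
How the app matches images internally isn’t documented here. One explanation consistent with what we saw is that lookups are keyed on an exact fingerprint of the file’s bytes. The Python sketch below assumes exactly that, and nothing more: the `fingerprint` and `lookup` helpers, the in-memory `registry`, and the stand-in image bytes are all hypothetical, not the app’s API. It shows why even a slight crop would come back as a miss under exact matching.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Exact cryptographic fingerprint of the raw bytes (an assumption)."""
    return hashlib.sha256(data).hexdigest()


# Stand-in for the bytes of a published image file.
original = bytes(range(256)) * 4

# Hypothetical registry keyed on the exact fingerprint.
registry = {fingerprint(original): {"source": "example publisher"}}


def lookup(data: bytes):
    """Return the registered record, or None if the bytes differ at all."""
    return registry.get(fingerprint(data))


# Simulate a slightly cropped copy by dropping a few trailing bytes.
cropped = original[:-16]

found_original = lookup(original)  # the untouched file is found
found_cropped = lookup(cropped)    # the altered copy is not
```

A cryptographic hash changes completely when even one byte changes, so matching that tolerates small visual edits would need a different kind of fingerprint.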

Some of these technical shortcomings will surely be ironed out, but there are even more complicated engineering problems that Fox will need to address if it hopes to help consumers suss out AI-generated content.

Even when Verify is working as advertised, it can’t tell you whether the content was AI-generated, only that it came from Fox (or from whatever other source uploaded it, presuming other publishers use Verify in the future). If the goal is to help consumers discern AI-generated content from human content, this doesn’t help. Even trusted news outlets like Sports Illustrated have become embroiled in controversy for using AI-generated content.

Then there’s the problem of user apathy. People tend not to care much about whether what they’re reading is true, as Fox is surely aware. This is especially true when people want something to be true.

For something like Verify to be useful for consumers, one imagines it will need to be built directly into the tools that people use to view content, like web browsers and social media platforms. You could imagine a sort of badge, à la community notes, that shows up on content that’s been added to the Verify database.

Verify for Publishers

It feels unfair to rag on this barebones version of Verify given that Fox was pretty proactive in labeling it as a beta. Fox also isn’t only focused on general media consumers, as we have been in our testing.

Fox’s partner, Polygon, said in a press release shared with CoinDesk that “Verify establishes a technical bridge between media companies and AI platforms” and has additional features to help create “new commercial opportunities for content owners by utilizing smart contracts to set programmatic conditions for access to content.”

While the specifics here are somewhat vague, the idea seems to be that Verify will serve as a sort of global database for AI platforms that scrape the web for news content, providing a way for AI platforms to glean authenticity and for publishers to gate their content behind licensing restrictions and paywalls.

Verify would probably need buy-in from a critical mass of publishers and AI companies for this sort of thing to work; for now, the database just includes around 90,000 articles from Fox-owned publishers including Fox News and Fox Sports. The company also says it has opened the door for other publishers to add content to the Verify database, and it has open-sourced its code for those who want to build new platforms based on its tech.

Even in its current state, the licensing use case for Verify seems like a solid idea, particularly in light of the thorny legal questions that publishers and AI companies are currently reckoning with.

In a recently filed lawsuit against OpenAI and Microsoft, the New York Times has alleged its content was used without permission to train AI models. Verify could provide a standard framework for AI companies to access online content, thereby giving news publishers something of an upper hand in their negotiations with AI companies.